Background

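Testing has passed through several eras. First came unstructured testing, then formalized testing, and then, after ET emerged, the ET 1.0, ET 1.5 and ET 2.0 eras. Over that time, testers have understood “what testing is” and “what exploratory testing is” more and more deeply. Now we are in the ET 3.0 era. Led by James Bach and Michael Bolton, we have begun to advocate retiring the “unscientific” term “exploratory testing”. Other inaccurate testing terms we want to retire include “scripted testing”, “automated testing” and “manual testing”.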

What? We should stop using words we have known and used for so many years? Why? And what words should we use instead?

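I expected these questions. So I recently organized the relevant ideas and presented them in two consecutive live sessions in the “海盗派tester” WeChat group. Both sessions were a series of discussions on “Checking and Testing”. By discussing these basic testing concepts in depth, I hope to prompt fellow testers to think further about software testing. Using accurate terms in daily work helps the testing industry develop in a healthy way. It also helps the people who work around testing form a correct picture of it. For example, when people say “automated testing”, it sounds as if testing can be fully automated, and can therefore be faster, more efficient and cheaper in labor. In reality, tools can fully carry out only a small part of testing work. Most of testing, and the most important part of it, still requires skilled testers.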

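By clarifying these concepts, I also hope every testing organization will let its testers put their time and energy into better testing. That means work that is worth doing and that benefits product quality most directly. It does not mean spending large amounts of time counting test cases, writing piles of test documents, or laboriously preparing test statistics to report to management. I also hope every tester will recognize the importance of improving their own testing mindset and testing skills, and will start working on them. That matters more than blindly pursuing ever higher (even 100%) automation, learning more test tools, or trying to match developers’ coding skills.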

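Note: I am not saying that testers should not “learn to write code, use test tools, do automated checking, write test documents, report test statistics, or test according to test cases......”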

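What I want to stress is that these activities should not be treated as the core of testing. They readily produce visible results (“you can see them and talk about them”). However, what actually exposes defects is skilled testers, not testing formality. This holds especially for the vast majority of testing organizations. They should put testers’ abilities at the core and rely on testers’ professional skills to find valuable bugs and to improve our understanding of product quality. That is where testing’s greatest value lies.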

This will surely be a long journey, so let’s start by understanding the difference between checking and testing.

FYI:

Below are the image, text and audio versions of this interpretation. Use whichever you need.

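There is a lot of material on checking and testing. Most of it comes from James Bach’s and Michael Bolton’s blogs, articles and RST course materials. I organized it according to my own understanding, and it is offered for reference only.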

Checking and Testing: An Interpretation

Image version:

(A high-resolution image file can be downloaded here: http://www.taixiaomei.com/others10.php )

Checking vs Testing

- Organized by Xiaomei Tai, Oct. 2017

http://www.satisfice.com/blog/archives/856

Testing and Checking Refined

Tools encroach into every process they touch and tools change those processes.

As Marshall McLuhan said “We shape our tools, and thereafter our tools shape us.”

We may witness how industrialization changes cabinet craftsmen into cabinet factories, and that may tempt us to speak of the changing role of the cabinet maker, but the cabinet factory worker is certainly not a mutated cabinet craftsman. The cabinet craftsmen are still out there– fewer of them, true– nowhere near a factory, turning out expensive and well-made cabinets.

There now exists a vast marketplace for software products that are expected to be distributed and updated instantly.

We want to test a product very quickly. How do we do that? It’s tempting to say “Let’s make tools do it!” This puts enormous pressure on skilled software testers and those who craft tools for testers to use. Meanwhile, people who aren’t skilled software testers have visions of the industrialization of testing similar to those early cabinet factories. Yes, there have always been these pressures, to some degree. Now the drumbeat for “continuous deployment” has opened another front in that war.

We believe that skilled cognitive work is not factory work. That’s why it’s more important than ever to understand what testing is and how tools can support it.

For this reason, in the Rapid Software Testing methodology, we distinguish between aspects of the testing process that machines can do versus those that only skilled humans can do. We have done this linguistically by adapting the ordinary English word “checking” to refer to what tools can do. This is exactly parallel with the long established convention of distinguishing between “programming” and “compiling.” Programming is what human programmers do. Compiling is what a particular tool does for the programmer, even though what a compiler does might appear to be, technically, exactly what programmers do. Come to think of it, no one speaks of automated programming or manual programming. There is programming, and there is lots of other stuff done by tools. Once a tool is created to do that stuff, it is never called programming again.

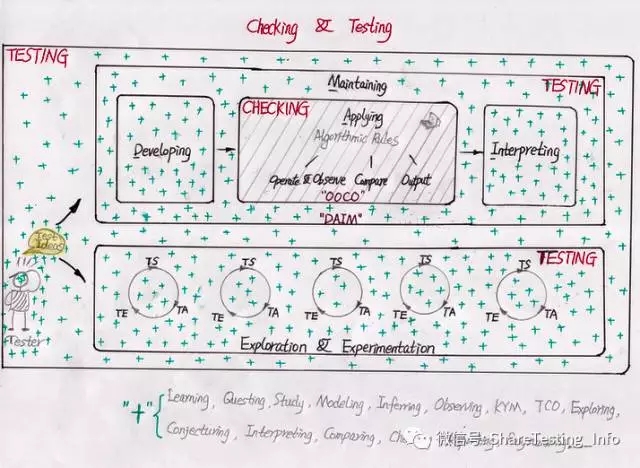

Testing is the process of evaluating a product by learning about it through exploration and experimentation, which includes to some degree: questioning, study, modeling, observation, inference, etc.

(A test is an instance of testing.)

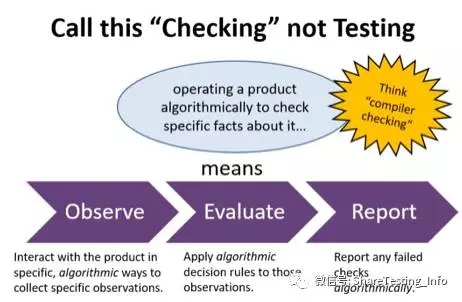

Checking is the process of making evaluations by applying algorithmic decision rules to specific observations of a product.

(A check is an instance of checking.)

1 What is checking?

Checking is the process of making evaluations by applying algorithmic decision rules to specific observations of a product.

Tags: algorithmically, confirming

Checking is one part of the process of testing that can be done entirely by a machine; just like compiling is one part of the process of developing software that can be done entirely by a machine.

Tags: can be done, compiling, process

-

The point of this is to emphasize the role of the skilled tester in developing, maintaining, applying, and interpreting automated checks.

Tags: Priority 1, a cognitive skilled tester: developing, applying, interpreting, maintaining

-

Automated checks include operation and observation, comparing and outputting results automatically based on algorithms.

Tags: Priority 2, operate & observe, compare, output

Don't mistake automated checking for testing or for automated testing. Testing cannot be automated.


Tags: Angry
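To make the four parts of an automated check concrete (operate, observe, compare, output), here is a minimal Python sketch. The product function `apply_discount`, the `CheckResult` type and the `run_check` helper are hypothetical stand-ins invented for illustration; they do not come from RST materials. Notice what the code does not do. A human chose the inputs, the expected value and the tolerance, and a human has to decide what a FAIL means.

```python
from dataclasses import dataclass

# Hypothetical product behaviour (a stand-in, not from the source).
def apply_discount(price: float, percent: float) -> float:
    return round(price * (1 - percent / 100), 2)

@dataclass
class CheckResult:
    name: str
    observed: float
    expected: float
    passed: bool

def run_check(name: str, price: float, percent: float,
              expected: float, tolerance: float = 0.005) -> CheckResult:
    # Operate: drive the product with one specific input.
    observed = apply_discount(price, percent)
    # Observe and compare: apply an algorithmic decision rule
    # (the expected value and tolerance were chosen by a human beforehand).
    passed = abs(observed - expected) <= tolerance
    # Output: a pass/fail bit; deciding what it means is left to a tester.
    return CheckResult(name, observed, expected, passed)

if __name__ == "__main__":
    checks = [
        run_check("10% off 100.00", 100.00, 10, 90.00),
        run_check("no discount", 59.99, 0, 59.99),
    ]
    for r in checks:
        status = "PASS" if r.passed else "FAIL"
        print(f"{status}: {r.name} (observed={r.observed}, expected={r.expected})")
```

The machine can repeat this as often as asked. Everything that made the check worth writing happened before the code ran, and everything that makes a FAIL meaningful happens after it.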

-

A check is only a PART of a test; the part that CAN be automated.

Tags: Refresh, Pause

I think the word "test" has two different meanings depending on its context. One is that a test is a test idea. The other is that a test is an instance of testing, which also includes the experimental part.

But Michael thinks only the 2nd understanding is true.

(The Rapid Software Testing Namespace)

In the first sense, as I understand it, a test is something we can talk about and think about, so it is related to ideas. Testing is something we do, so it is related to actions or activities.

For Michael and James, a test must be related to the experimental part. Otherwise, it cannot be called a test.

"An example is certainly not a test. (That's why there are different words for "example" and "test".) A real test is a CHALLENGE to a product." -- A test needs to have a purpose related to testing.

2 What is testing?

Testing is the process of evaluating a product by learning about it through exploration and experimentation, which includes to some degree: questioning, study, modeling, observation, inference, etc.

Testing is inherently exploratory. All testing is exploratory to some degree, but may also be structured by scripted elements.

If testing ISN'T exploratory, it's not really testing. That is, if we're not looking at new territory, answering new questions, making new maps of the product, performing new experiments on it, learning new things about it, challenging it... If we're not doing those things, we're not really testing.

http://www.developsense.com/blog/2011/05/exploratory-testing-is-all-around-you/ :

“review”, or

“designing scripts”, or

“getting ready to test”, or

“investigating a bug”, or

“working around a problem in the script”, or

“retesting around the bug fix”, or

“going off the script, just for a moment”, or

“realizing the significance of what a programmer said in the hallway, and trying it out on the system”, or

“pausing for a second to look something up”, or

“test-driven development”, or

“Hey, watch this!”, or

“I’m learning how to use the product”, or

“I’m shaking out it a bit”, or

“Wait, let’s do this test first instead of that test”, or

“Hey, I wonder what would happen if…”, or

“Is that really the right phone number?”, or

“Bag it, let’s just play around for a while”, or

“How come what the script says and what the programmer says and what the spec says are all different from each other?”, or

“Geez, this feature is too broken to make further testing worthwhile; I’m going to go to talk to the programmer”, or

“I’m training that new tester in how to use this product”, or

“You know, we could automate that; let’s try to write a quickie Perl script right now”, or

“Sure, I can test that…just gimme a sec”, or

“Wow… that looks like it could be a problem; I think I’ll write a quick note about that to remind me to talk to my test lead”, or

“Jimmy, I’m confused… could you help me interpret what’s going on on this screen?”, or

“Why are we always using ‘tester’ as the login account? Let’s try ‘tester2’ today”, or

“Hey, I could cancel this dialog and bring it up again and cancel it again and bring it up again”, or

“Cool! The return value for each call in this library is the round-trip transaction time—and look at these four transactions that took thirty times longer than average!”, or

“Holy frijoles! It blew up! I wonder if I can make it blow up even worse!”, or

“Let’s install this and see how it works”, or

“Weird… that’s not what the Help file says”, or

“That could be a cool tool; I’m going to try it when I get home”, or

“I’m sitting with a new tester, helping her to learn the product”, or (and this is the big one)

“I’m preparing a test script.”

-

A script is something that puts some form of control on the tester’s actions.

-

In Rapid Software Testing, we also talk about scripts as something more general, in the same kind of way that some psychologists might talk about “behavioural scripts”: things that direct, constrain, or program our behaviour in some way. Manners, politeness, is an example of that.

-

We are not referring to text! By “script” we are speaking of any control system or factor that influences your testing and lies outside of your realm of choice (even temporarily). This includes text instructions, but also any form of instructions, or even biases that are not instructions.

-

In common talk about testing, there’s one fairly specific and narrow sense of the word “script”—a formal sequence of steps that are intended to specify behaviour on the part of some agent—the tester, a program, or a tool. Let’s call that “formal scripting”.

-

Much of the time, human testers DO NOT need very formal, very explicit procedures to do good testing work. Very formal, very explicit procedures tend to focus people on specific actions and observations. To some degree, and in some circumstances, testing might need that. But excellent testing also requires variation and diversity.

-

So a script, the way we talk about it, does not refer only to specific instructions you are given and that you must follow. A script is something that guides your actions.

-

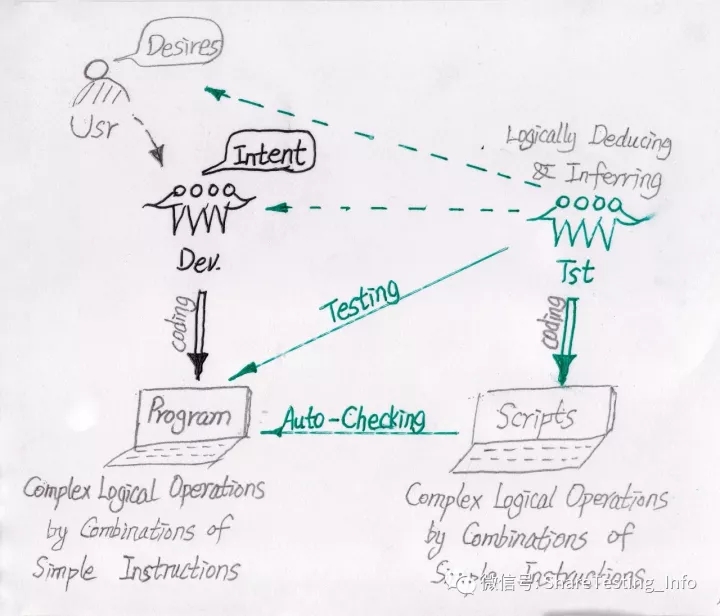


Jerry Weinberg’s 1961 chapter about testing in his book, Computer Programming Fundamentals, depicted testing as inherently exploratory and expressed caution about formalizing it. He wrote, “It is, of course, difficult to have the machine check how well the program matches the intent of the programmer without giving a great deal of information about that intent. If we had some simple way of presenting that kind of information to the machine for checking, we might just as well have the machine do the coding. Let us not forget that complex logical operations occur through a combination of simple instructions executed by the computer and not by the computer logically deducing or inferring what is desired.”

test cases are not testing

-

Test Cases Are Not Testing.pdf

Our industry has a troubling obsession with test cases. Writing test cases is mentioned in job descriptions as if it were the main occupation of testers. Testers who approach us for advice too often use phrases like “my test cases” to mean “my work as a tester.”

One common idea of a test case is that it is a set of instructions and/or data for testing some part of a product in some way.

Considering the variety of things called test cases around the industry, a definition that covers all of them would have to be quite general. However, our concern in this article is mostly with detailed, procedural, documented test cases, and the attitudes surrounding that kind of test case.

Our claim is that even good test cases cannot comprise or represent good testing.

Programmers write code. This is, of course, a simplification of what programmers do: modeling, designing, problem-solving, inventing data structures, choosing algorithms. Programming may involve removing or replacing code, or exercising the wisdom of knowing what code not to write. Even so, programmers write programs. Thus, the bulk of their work seems tangible. The parallel with testing is obvious: if programmers write explicit source code that manifests working software, perhaps testers write explicit test cases that manifest testing.

But this becomes fertile ground for a vicious cycle: in some specific situations, managers may ask to see test cases for some valid engineering reason, and testers may deliver those cases; but soon providing test cases becomes a habit, ......

Test cases are not evil. The problem is the obsession that shoves aside the true business of testing. In too many organizations, testing is fat and slow.

We would like to break the obsession, and return test cases to their rightful place among the tools of our craft and not above those tools. It’s time to remind ourselves what has always been true: that test cases neither define nor comprise testing itself. Though test cases are an occasionally useful means of supporting testing, the practice of testing does not require test cases.

In a test case culture, the tester is merely the medium by which test cases do their work. Consequently, while writing test cases may be considered a skilled task, executing them is seen as a task fit for novices (or better yet, robots).

A common phrase in that culture is that we should “derive test cases from requirements” as if the proper test will be immediately obvious to anyone who can read. In test case culture, there is little talk of learning or interpreting. Exploration and tinkering, which characterize so much of the daily experience of engineering and business, are usually invisible to the factory process, and when noticed are considered either a luxury or a lapse of discipline.

Testing is Not a Factory. Testing is a Performance.

Bugs are not “in” the product. Bugs are about the relationship between the product and the people who desire something from it.

However, even if all imaginable checks are performed, there is no theory, nor metric, nor tool, that can tell us how many important bugs remain. We must test - experiment in an exploratory way - in order to have a chance of finding them. No one can know in advance where the unanticipated bugs will be and therefore what scripts to write.

Toward a Performance Culture

A performance culture for testing is one that embraces testing as a performance, of course. But it also provides the supportive business infrastructure to make it work. Consider how different this is from the factory model:

• Testing Concept: Testing is an activity performed by skilled people. The purpose of testing is to discover important information about the status of the product, so that our clients can make informed decisions about it.

• Recruitment: Hire people as testers who demonstrate curiosity, enjoy learning about technology, and are not afraid of confusion or complexity.

• Diversity: Foster diversity among testers, in terms of talents, temperaments, and any other potentially relevant factor, in order to maximize testing performance in test teams.

• Training: Systematically train testers, both offline and on the job, with ongoing coaching and mentoring.

• Peer-to-peer learning: Use peer conferences and informal meetups to build collegial networks and experiment with methods and tools. Occasionally test in group events (e.g. “bug parties”) to foster common understanding about test practices.

......

test cases are not testing

a test case is not a test

a test case is some description of some PART OF a test

a test is what you think and what you do

a test is a challenge to a product

Testing is Not a Factory. Testing is a Performance.

-

I can’t think of another field that involves complex THINKING work that organizes *research* in terms of “cases”.

-

https://huddle.eurostarsoftwaretesting.com/resources/other/no-test-cases-required-test-session-chartering/

The same script guides different testers to different degrees. Novices will be guided heavily, while experienced testers take scripts only as heuristics.

-

We don't think in terms of "pass" or "fail"; we ask "would the behaviour or state of the product represent a *problem* for the customer, the end user?"

3 Relations

Testing encompasses checking (if checking exists at all), whereas checking cannot encompass testing.

Testing can exist without checking.

Checking is a process that can, in principle, be performed by a tool instead of a human, whereas testing can only be supported by tools.

Checking can be COMPLETELY automated, whereas testing is intrinsically a human activity.

Checking is short for fact checking; Testing is an open-ended investigation.

Checking is not the same as confirming. Checks are often used in a confirmatory way (most typically during regression testing), but we can also imagine them used for disconfirmation or for speculative exploration (i.e. a set of automatically generated checks that randomly stomp through a vast space, looking for anything different).
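Here is a minimal sketch of that speculative, non-confirmatory use of checks. It assumes two hypothetical versions of a price-formatting function, `format_price_v1` and `format_price_v2`, neither of which comes from the source, and generates random inputs to look for any difference between them. The checks do not know which version is right. They only flag inputs where the outputs disagree.

```python
import random

# Hypothetical "old" and "new" versions of the same feature (not from the source).
def format_price_v1(cents: int) -> str:
    # Floor division and modulo: gives odd results for negative amounts.
    return f"{cents // 100}.{cents % 100:02d}"

def format_price_v2(cents: int) -> str:
    # Refactored version based on float formatting.
    return f"{cents / 100:.2f}"

def differential_check(trials: int, seed: int = 0) -> list[int]:
    """Randomly stomp through the input space and collect every input
    where the two versions disagree. Nothing here decides which one is right."""
    rng = random.Random(seed)  # fixed seed so a run can be reproduced
    mismatches = []
    for _ in range(trials):
        cents = rng.randint(-10_000, 10_000)
        if format_price_v1(cents) != format_price_v2(cents):
            mismatches.append(cents)
    return mismatches

if __name__ == "__main__":
    found = differential_check(1000)
    print(f"{len(found)} differences found")
    for cents in found[:3]:
        print(cents, format_price_v1(cents), format_price_v2(cents))
```

In this sketch only negative amounts ever show up as differences. That gives a tester a lead to investigate (which formatting is correct for refunds?), not a verdict.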

4 What is the value of checking? (When do we need human checking and automated checking?)

Perform hundreds and thousands of variations on the same basic pattern very quickly.

Work without a break for hours and hours and hours.

Repeat the same checks many, many times.

When should we use a script?

-

If an agent (a tester or a mechanical process) needs to do something specific, then a script might be a good idea.

-

If the intended actions of the agent can be specified before the activity, then a script might be a good idea.

-

If the agent performing the action would not know what to do unless told, OR if the agent might know what to do, but forget, then a script might be a pretty good idea.

-

If a script (and test cases are a form of script, in the sense that I described above) organizes information for more efficient, reliable access, then it might be a good idea.

-

If a script helps speed up design or execution or evaluation, it might be a really good idea.

5 What are the problems that checking cannot help us resolve?

One real problem with formal scripting is this: only a fraction of what a tester thinks and what a tester does can be captured in a script. Testing is full of tacit knowledge, things that we know but that are not told.

This is a problem with software products in general: the machine will not do what the human would do. When a tester is evaluating a product and encounters a problem with the instructions she has been given, she does NOT freeze and turn blue.

Human beings “repair” the difference between what they’ve been told to do and what they are able to do in the situation. Machines don’t do that without being programmed.

Here’s another real problem with giving a tester an explicit, narrow set of instructions to follow: the tester doesn’t learn anything or they learn very little, because the tester’s actions are guided from the outside.

The Problems of Verification

-

The Logic of Verification.pdf

-

Verification is about establishing whether something is true or false.

A check is a tool by which we verify specific facts.

Confirmation means to verify something that we assumed to be true.

Actually, you can verify a little more. From verifying that the product did something, you can verify that the product can work.

-

Validation is about establishing whether we feel okay about something’s quality; figuring out if we agree on an observation.

validate: verb (used with object), validated, validating.

1. to make valid; substantiate; confirm: Time validated our suspicions.

2. to give legal force to; legalize.

3. to give official sanction, confirmation, or approval to, as elected officials, election procedures, documents, etc.: to validate a passport.

6 Clarification about ET (exploratory testing) and ST (scripted testing)

http://www.satisfice.com/blog/archives/1509

Tags: ET3.0

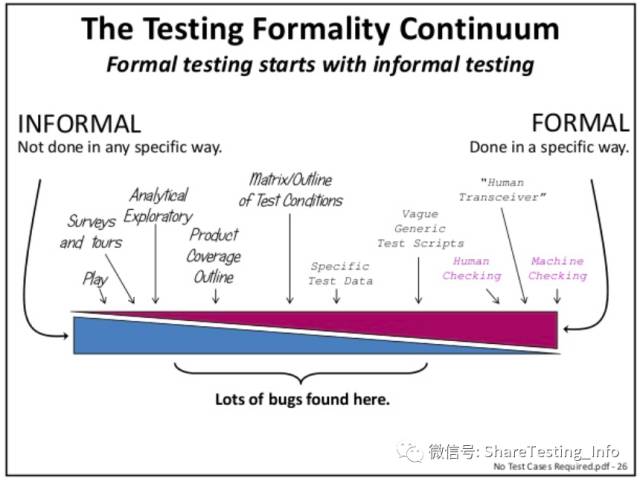

ET 1.0 (~1995): Thus, the first iteration of exploratory testing (ET) as rhetoric and theory focused on escaping the straitjacket of the script and making space for that “better testing”. We were facing the attitude that “Ad hoc testing is uncontrolled and unmanageable; something you shouldn’t do.” We were pushing against that idea, and in that context ET was a special activity. “Put aside your scripts and look at the product! Interact with it! Find bugs!”

ET 1.5: Explication. SBTM was intended to help defend exploratory work from compulsive formalizers who were used to modeling testing in terms of test cases.

ET 2.0: Integration. The exploratory-scripted continuum:

-

This is a sliding bar on which testing ranges from completely exploratory to completely scripted. All testing work falls somewhere on this scale. Having recognized this, we stopped speaking of exploratory testing as a technique, but rather as an approach that applies to techniques (or as Cem likes to say, a “style” of testing).

-

We saw testing as involving specific structures, models, and cognitive processes other than exploring, so we felt we could separate exploring from testing in a useful way. Much of what we had called exploratory testing in the early 90’s we now began to call “freestyle exploratory testing.”

-

By 2006, we settled into a simple definition of ET, simultaneous learning, test design, and test execution.

-

Proposed originally by Cem and later edited by several others: “a style of testing that emphasizes the freedom and responsibility of the individual tester to continually optimize the quality of his work by treating test design, test execution, test result interpretation, and learning as mutually supporting activities that continue in parallel throughout the course of the project.”

-

Exploration can mean many things: searching a space, being creative, working without a map, doing things no one has done before, confronting complexity, acting spontaneously, etc.

-

What the adjective “exploratory” added, and how it contrasted with “scripted,” was the dimension of agency. In other words: self-directedness.

-

We now recognize that by “exploratory testing”, we had been trying to refer to rich, competent testing that is self-directed. In other words, in all respects other than agency, skilled exploratory testing is not distinguishable from skilled scripted testing.

Tags: Red

-

The essence of scripted testing is that the tester is not in control, but rather is being controlled by some other agent or process. This one simple, vital idea took us years to apprehend!

-

In 2007, another big slow leap was about to happen. It started small: inspired in part by a book called The Shape of Actions, James began distinguishing between processes that required human judgment and wisdom and those which did not. He called them “sapient” vs. “non-sapient.” This represented a new frontier for us: systematic study and development of tacit knowledge.

-

In 2009, Michael followed that up by distinguishing between testing and checking. Testing cannot be automated, but checking can be completely automated. Checking is embedded within testing.

ET 3.0: Normalization. Yes, we are retiring that term, after 22 years. Why?

Because we now define all testing as exploratory.

-

In 2011, sociologist Harry Collins began to change everything for us. It started when Michael read Tacit and Explicit Knowledge. We were quickly hooked on Harry’s clear writing and brilliant insight. He had spent many years studying scientists in action, and his ideas about the way science works fit perfectly with what we see in the testing field.

By studying the work of Harry and his colleagues, we learned how to talk about the difference between tacit and explicit knowledge, which allows us to recognize what can and cannot be encoded in a script or other artifacts. He distinguished between behaviour (the observable, describable aspects of an activity) and actions (behaviours with intention) (which had inspired James’ distinction between sapient and non-sapient testing). He untangled the differences between mimeomorphic actions (actions that we want to copy and to perform in the same way every time) and polimorphic actions (actions that we must vary in order to deal with social conditions); in doing that, he helped to identify the extents and limits of automation’s power. He wrote a book (with Trevor Pinch) about how scientific knowledge is constructed; another (with Rob Evans) about expertise; yet another about how scientists decide to evaluate a specific experimental result.

-

Are you doing testing? Then you are already doing exploratory testing. Are you doing scripted testing? If you’re doing it responsibly, you are doing exploratory testing with scripting (and perhaps with checking). If you’re only doing “scripted testing,” then you are just doing unmotivated checking, and we would say that you are not really testing. You are trying to behave like a machine, not a responsible tester.

-

ET 3.0, in a sentence, is the demotion of scripting to a technique, and the promotion of exploratory testing to, simply, testing.

History of Definitions of ET: http://www.satisfice.com/blog/archives/1504

1988 First known use of the term, defined variously as “quick tests”; “whatever comes to mind”; “guerrilla raids” – Cem Kaner, Testing Computer Software (There is explanatory text for different styles of ET in the 1988 edition of Testing Computer Software. Cem says that some of the text was actually written in 1983.)

1990 “Organic Quality Assurance”, James Bach’s first talk on agile testing filmed by Apple Computer, which discussed exploratory testing without using the words agile or exploratory.

1993 June: “Persistence of Ad Hoc Testing” talk given at ICST conference by James Bach. Beginning of James’ abortive attempt to rehabilitate the term “ad hoc.”

1995 February: First appearance of “exploratory testing” on Usenet in message by Cem Kaner.

1995 Exploratory testing means learning, planning, and testing all at the same time. – James Bach (Market Driven Software Testing class)

1996 Simultaneous exploring, planning, and testing. – James Bach (Exploratory Testing class v1.0)

1999 An interactive process of concurrent product exploration, test design, and test execution. – James Bach (Exploratory Testing class v2.0)

2001 (post WHET #1): The Bach View

Any testing to the extent that the tester actively controls the design of the tests as those tests are performed and uses information gained while testing to design new and better tests.

The Kaner View

Any testing to the extent that the tester actively controls the design of the tests as those tests are performed, uses information gained while testing to design new and better tests, and where the following conditions apply:

The tester is not required to use or follow any particular test materials or procedures.

The tester is not required to produce materials or procedures that enable test re-use by another tester or management review of the details of the work done.

– Resolution between Bach and Kaner following WHET #1 and BBST class at Satisfice Tech Center.

(To account for both of views, James started speaking of the “scripted/exploratory continuum” which has greatly helped in explaining ET to factory-style testers)

2003-2006 Simultaneous learning, test design, and test execution – Bach, Kaner

2006-2015 An approach to software testing that emphasizes the personal freedom and responsibility of each tester to continually optimize the value of his work by treating learning, test design and test execution as mutually supportive activities that run in parallel throughout the project. – (Bach/Bolton edit of Kaner suggestion)

2015 Exploratory testing is now a deprecated term within Rapid Software Testing methodology. See testing, instead. (In other words, all testing is exploratory to some degree. The definition of testing in the RST space is now: Evaluating a product by learning about it through exploration and experimentation, including to some degree: questioning, study, modeling, observation, inference, etc.)

There is no “manual testing”. There is no “manual programming”, either. Nor is there “manual management”.

As long as testers keep talking about “manual testing” and “automated testing”, we’re going to have this problem of managers wanting to “automate all the testing”. I’d recommend we stop talking about ourselves that way.

http://www.developsense.com/blog/2013/02/manual-and-automated-testing/

It’s cool to know that the machine can perform a check very precisely, or buhzillions of checks really quickly. But the risk analysis, the design of the check, the programming of the check, the choices about what to observe and how to observe it, the critical interpretation of the result and other aspects of the outcome—those are the parts that actually matter, the parts that are actually testing. And those are exactly the parts that are not automated.

As Cem Kaner so perfectly puts it, “When we’re testing, we’re not talking about something you’re doing with your hands, we’re talking about something you do with your head.”

We may also ignore myriad ways in which automation can assist and amplify and accelerate and enable all kinds of testing tasks: collecting data, setting up the product or the test suite, reconfiguring the product, installing the product, monitoring its behaviour, logging, overwhelming the product, fuzzing, parsing reports, visualizing output, generating data, randomizing input, automating repetitive tasks that are not checks, preparing comparable algorithms, comparing versions—and this list isn’t even really scratching the surface.

There is no such thing as automated testing.

-

We don’t talk about test automation; we find it unhelpful and misleading. We DO talk about automated checking, which is a part of testing that can be done entirely by a tool.

We don't say "exploratory testing" in most cases any more.

We don't say "scripted testing" in most cases any more.

There is testing. There are tools. Testers use tools. One kind of tool use is automated checking.

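So:

Stop saying “automated testing” and “manual testing”. These terms are inaccurate.

Also try not to say “exploratory testing” and “scripted testing” any more. In fact, all testing is exploratory, and every tester can use scripts and tools.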